I've heard this quote before, but it has been on my mind this last week or so, since I've been on the phones supporting customers:

"Computer programming today is a race between software engineers, striving to build bigger and better 'idiot-proof' programs, and the Universe, trying to produce bigger and better idiots. So far, the Universe seems to be winning."

I also read another blog about user interfaces in which the author points out that computer interfaces are designed with beginners in mind. However, once users get over the learning curve, the interface becomes a crutch. He asks a rather tough question: "So is it possible to design a system that suits both beginners and professionals?" There's no easy answer to that. We could probably all rant about how certain implementations fail, but do we have anything better to offer? (For all my GNU/Linux snobbery, I must admit the problems with the two major open-source desktop interfaces: GNOME is too simple and KDE too complex.)

As for "idiots", I don't really mean it. The same could be said of anyone who drives a car: I certainly couldn't diagnose much of what goes on inside one when something goes wrong. Yet I do appreciate having a working knowledge of things. And I can certainly see that the trend in computing (rather frustrating for developers) seems to be toward eliminating the learning curve entirely, and, as usual, that renders much of computing's usefulness null and void. (If you don't know how to use a mouse or save a file, you won't be very productive.) Does that mean the learning curve should be a bit steeper, and computers should demand a bit more knowledge before people use them? Or does it mean we should all invest in our local computer education center?

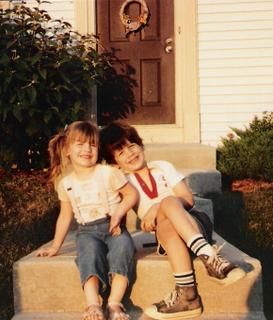

So I've been doing a lot of familial reminiscing in the past few weeks, plus I just figured out how to add images on Blogger, so here's one of my sister and me, circa 1987. This is a particularly dear picture to me.

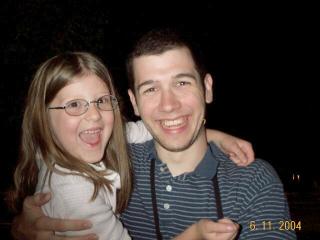

And here's one from last year of my littlest sister and me. She isn't quite so little anymore; she just turned 9 in July!